MIT Computer Science and Artificial Intelligence Laboratory

HIGHLIGHTS

Scene Chronology

- We present a new method for taking an urban scene reconstructed from a large Internet photo collection and reasoning about its change in appearance through time. Our method estimates when individual 3D points in the scene existed, then uses spatial and temporal affinity between points to segment the scene into spatio-temporally consistent clusters. The result of this segmentation is a set of spatio-temporal objects that often correspond to meaningful units, such as billboards, signs, street art, and other dynamic scene elements, along with estimates of when each existed. Our method is robust and scalable to scenes with hundreds of thousands of images and billions of noisy, individual point observations. We demonstrate our system on several large-scale scenes and show an application to time-stamping photos. Our work can serve to chronicle a scene over time, documenting its history and discovering dynamic elements in a way that can be easily explored and visualized.

A montage of a planar region of the 5 Pointz dataset, showing how this region has changed over time as different pieces of art have been overlaid atop one another.

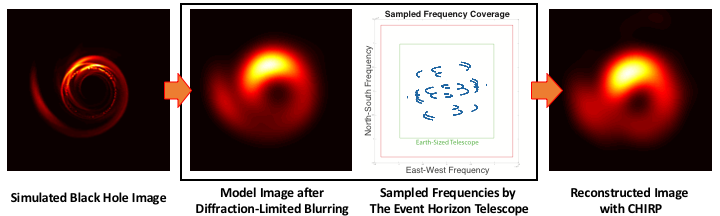

Computational Imaging for VLBI Image Reconstruction

- It is believed that the heart of the Milky Way hosts a four million solar mass black hole feeding off a spinning disk of hot gas. An image of the shadow cast by the event horizon of the black hole could help to address a number of important scientific questions; for instance, does Einstein’s theory of general relativity hold in extreme conditions? Unfortunately, the event horizon of this black hole appears so small in the sky that imaging it would require a single-dish radio telescope the size of the Earth. Although a single-dish telescope this large is unrealizable, by connecting disjoint radio telescopes located all around the globe, we are working with a team (the Event Horizon Telescope, or EHT) that is creating an Earth-sized computational telescope capable of taking the very first picture of a black hole’s shadow. Measurements from this computational telescope are incredibly sparse and noisy, causing traditional astronomical imaging methods to fail. By combining techniques from both astronomy and computer science, we have been able to develop innovative ways to robustly reconstruct the underlying images using data from this telescope network. We have demonstrated the success of our method on realistic synthetic experiments as well as publicly available real data. We have also presented this problem in a way that is accessible to members of the computer vision community, and provide a dataset website (vlbiimaging.csail.mit.edu) that facilitates controlled comparisons across algorithms.

Example reconstruction of a black hole image with the Event Horizon Telescope. The left image shows a numerical simulation of the black hole in M87, and the center image shows how this simulated image would appear to a conventional telescope that was even larger than the Earth. The EHT only samples a small number of spatial frequencies, shown as the blue dots in the next panel. For comparison, the green square represents what frequencies would be measured using an Earth-sized telescope. The rightmost image shows a reconstructed image with our method using only synthetic observations with the EHT.
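
The effect of sparse frequency coverage can be illustrated with a toy 1D sketch. It is not the reconstruction method described here, nor the EHT measurement model: it assumes a hypothetical 1D "sky", keeps only a handful of Fourier coefficients, fills the rest with zeros, and inverts. All function names and sampled frequency indices are made up for illustration.

```python
import cmath
import math
from typing import List, Set


def dft(signal: List[float]) -> List[complex]:
    """Plain O(n^2) discrete Fourier transform."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(spectrum: List[complex]) -> List[float]:
    """Inverse transform; returns the real part of each sample."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


def naive_reconstruction(signal: List[float], sampled: Set[int]) -> List[float]:
    """Keep only the sampled frequencies, zero-fill the rest, and invert.

    For a real-valued signal the coefficient at -k is the conjugate of the
    one at k, so measuring k also gives -k for free.
    """
    n = len(signal)
    spectrum = dft(signal)
    kept = [
        spectrum[k] if (k in sampled or (n - k) % n in sampled) else 0j
        for k in range(n)
    ]
    return inverse_dft(kept)


def rms_error(a: List[float], b: List[float]) -> float:
    """Root-mean-square difference between two equal-length signals."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Hypothetical 1D "sky": two bright sources on a dark background.
sky = [0.0] * 32
sky[10] = 1.0
sky[13] = 0.6

full = naive_reconstruction(sky, set(range(32)))   # every frequency measured
sparse = naive_reconstruction(sky, {0, 1, 3, 7})   # only a few frequencies measured

print("RMS error, full coverage:  ", rms_error(sky, full))
print("RMS error, sparse coverage:", rms_error(sky, sparse))
```

With full coverage the signal is recovered up to rounding error, while the zero-filled sparse inversion smears the two sources together. This is the kind of gap that priors and more careful reconstruction methods are meant to close.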

Deviation Magnification: Revealing Geometric Deviation in a Single Image

- Structures and objects are supposed to have idealized geometries such as straight lines or circles. Although not always visible to the naked eye, in reality, physical objects deviate from their idealized models. Our goal is to reveal and visualize such subtle geometric deviations, which can contain useful, surprising information about our world. Our framework, termed "Deviation Magnification", takes a still image as input, fits a parametric model to an object of interest, computes the geometric deviations, and renders an output image in which the deviations are exaggerated. To deal with subtle sub-pixel deviations, we address several significant challenges including changes in texture in the vicinity of the shape contour, sampling, lens distortions, and spatial aliasing. We demonstrate the usefulness of our method through quantitative evaluation with synthetic datasets and also by application to challenging natural images.

Revealing the sagging of a house’s roof from a single image. A perfect straight line marked by p1 and p2 is automatically fitted to the house’s roof in the input image (a). Our algorithm analyzes and amplifies the geometric deviation from this straight line, revealing the sagging of the roof in (b). View II shows a consistent result of our method (d) using the input image (c). Each viewpoint was processed completely independently.
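
The fit, measure, and exaggerate steps can be sketched on a list of contour points. This is a simplified illustration under stated assumptions, not the framework itself: it works on already-extracted (x, y) points rather than image pixels, uses an ordinary least-squares line as the parametric model, measures deviation vertically, and ignores the sub-pixel, texture, and lens-distortion issues mentioned above. The function names and the sample roofline are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def fit_line(points: List[Point]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def magnify_deviation(points: List[Point], factor: float) -> List[Point]:
    """Scale each point's vertical deviation from the fitted line by `factor`."""
    slope, intercept = fit_line(points)
    magnified = []
    for x, y in points:
        model_y = slope * x + intercept   # idealized geometry
        deviation = y - model_y           # what the object actually does
        magnified.append((x, model_y + factor * deviation))
    return magnified


# Hypothetical contour: a nearly straight roofline that sags 0.5 units mid-span.
roofline = [(float(x), 10.0 - 0.02 * x * (10 - x)) for x in range(11)]
exaggerated = magnify_deviation(roofline, factor=20.0)

for (x, y), (_, y_big) in zip(roofline, exaggerated):
    print(f"x={x:4.1f}  original y={y:6.3f}  magnified y={y_big:6.3f}")
```

A factor of 1.0 returns the input unchanged, which is a convenient sanity check before choosing a larger magnification.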

The Aperture Problem for Refractive Motion

- When viewed through a small aperture, a moving image provides incomplete information about the local motion. Only the component of motion along the local image gradient is constrained. Optical flow algorithms must therefore aggregate information from nearby image locations in order to estimate all components of motion. This limitation of local evidence for estimating optical flow is called “the aperture problem”.

- In this work we have posed and solved a generalization of the aperture problem for moving refractive elements. We consider a common setup in air flow imaging or telescope observation: a camera is viewing a static background through unknown refractive elements. We then ask the fundamental question: what does the local image information tell us about the motion of refractive elements? We have developed and verified our theory on sequences of both solid and fluid refractive objects.
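
For the classical opaque case, the ambiguity can be made concrete with the standard brightness-constancy constraint from the optical flow literature. The notation is conventional and is an assumption here, not taken from this work: $I_x$, $I_y$, and $I_t$ are the spatial and temporal image derivatives at a pixel, and $(u, v)$ is the unknown local motion.

$$
I_x u + I_y v + I_t = 0
$$

This is a single equation in two unknowns, so one location determines only the component of motion along the unit gradient direction, written here as $w_n$:

$$
w_n = -\frac{I_t}{\sqrt{I_x^2 + I_y^2}}
$$

The component perpendicular to the gradient does not appear in the constraint at all, which is why evidence from neighboring locations with different gradient directions is needed.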

The aperture problem for an opaque object. (a) A camera is imaging an opaque moving object (gray). (b) When a vertical edge is observed within the aperture (the white circular mask), we can resolve the horizontal component of the motion. (c) The vertical component of the motion is ambiguous, because when the object moves vertically, no change is observed through this aperture. The aperture problem for a refractive object. (d) A camera is viewing a stationary and planar background (white and red) through a moving Gaussian-shaped glass (blue). (e) The horizontal motion is ambiguous, because the observed sequence is symmetric. That is, if the glass moves in the opposite direction, the same sequence will be observed. (f) The vertical motion can be recovered, e.g. by tracking the observed tip of the bump.

Estimating the Material Properties of Fabric from Video

- We predict a fabric’s intrinsic material properties using a set of statistical features extracted from a video of fabric moving due to an unknown wind force. The success of the model is demonstrated on a newly collected, publicly available database of fabric videos with corresponding measured ground truth material properties. The model’s performance is also compared to human performance on the same task.

- Humans passively estimate the material properties of objects on a daily basis. For instance, from only an image or video you are often able to identify if an object is hard or soft, rough or smooth, flexible or rigid. Designing a system to estimate these properties from a video is a difficult problem that is essential for automatic scene understanding. Such a system could be very useful for many applications such as robotics, video search, online shopping, material classification, material editing, and predicting objects’ behavior under different applied forces.

- We believe our dataset and algorithmic framework are the first attempt to passively estimate the material properties of deformable objects moving due to unknown forces from video. This work suggests that many physical systems with complex mechanics may generate image data that encodes their underlying intrinsic material properties in a way that is extractable by efficient discriminative methods.

A sample of the fabrics in our collected database ranked according to stiffness predicted by our model. The top panel shows physical fabric samples hanging from a rod. The bottom panel shows a horizontal space × time slice of a video when the fabrics are blown by the same wind intensity. Bendable fabrics generally contain more high frequency motion than stiff fabrics.

The Visual Microphone: Passive Recovery of Sound from Video

- When sound hits an object, it causes small vibrations of the object’s surface. Using only high-speed video of the object, we extract the minute vibrations and partially recover the sound that produced them, allowing us to turn everyday objects—a glass of water, a potted plant, a box of tissues, or a bag of chips—into visual microphones.

- Vibrations in objects due to sound have been used in recent years for remote sound acquisition, which has important applications in surveillance and security, such as eavesdropping on a conversation from afar. Existing approaches to acquire sound from surface vibrations at a distance are active in nature, requiring a laser beam or pattern to be projected onto the vibrating surface. We propose a passive method to recover audio signals using video. Our method visually detects small vibrations in an object responding to sound, and converts those vibrations back into an audio signal, turning visible everyday objects into potential microphones.

- Through our experiments, we found that light and rigid objects make especially good visual microphones. We believe that using video cameras to recover and analyze sound-related vibrations in different objects will open up interesting new research and applications.

Left: when sound hits an object (in this case, an empty bag of chips) it causes extremely small surface vibrations in that object. We are able to extract these small vibrations from high-speed video and reconstruct the sound that produced them, using the object as a visual microphone from a distance. Right: an instrumental recording of “Mary Had a Little Lamb” (top row) is played through a loudspeaker, then recovered from video of different objects: a bag of chips (middle row), and the leaves of a potted plant (bottom row).
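
The idea of turning tiny frame-to-frame motions into a one-dimensional signal can be illustrated with a toy sketch. It is not the method used in this work. It assumes a synthetic 1D intensity profile that shifts by a sub-pixel amount in each frame, and it estimates that shift with a linearized least-squares fit against the first frame, a stand-in technique chosen for brevity. The pattern, frame rate, tone, and all function names are hypothetical.

```python
import math
from typing import List


def profile(x: float) -> float:
    """Synthetic smooth intensity pattern (a hypothetical textured surface)."""
    return math.sin(0.3 * x) + 0.5 * math.cos(0.11 * x)


def render_frame(shift: float, width: int = 64) -> List[float]:
    """Sample the pattern displaced by `shift` pixels."""
    return [profile(x - shift) for x in range(width)]


def estimate_shift(reference: List[float], frame: List[float]) -> float:
    """Linearized least-squares shift estimate, valid for sub-pixel motion.

    For a small shift d, frame(x) is approximately
    reference(x) - d * reference'(x), so d follows from a 1D least-squares fit.
    """
    num = 0.0
    den = 0.0
    for x in range(1, len(reference) - 1):
        grad = (reference[x + 1] - reference[x - 1]) / 2.0  # central difference
        diff = frame[x] - reference[x]
        num += -grad * diff
        den += grad * grad
    return num / den


def recover_signal(frames: List[List[float]]) -> List[float]:
    """One displacement sample per frame, measured against the first frame."""
    reference = frames[0]
    return [estimate_shift(reference, frame) for frame in frames]


# Simulated surface vibrating by at most 0.05 pixels at 440 Hz,
# filmed at an assumed 2200 frames per second.
frame_rate = 2200.0
tone_hz = 440.0
true_shift = [
    0.05 * math.sin(2 * math.pi * tone_hz * n / frame_rate) for n in range(50)
]
frames = [render_frame(d) for d in true_shift]
recovered = recover_signal(frames)

worst = max(abs(r - t) for r, t in zip(recovered, true_shift))
print("largest error in recovered displacement (pixels):", worst)
```

The recovered list is a time series sampled at the video frame rate, so it can be treated like an audio waveform. The highest tone it can represent is half the frame rate, which is one reason high-speed video is used.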