SLS RESEARCH Multimodal Processing and Interaction

Multimodal Interaction

Although speech and language are extremely natural and efficient, a simple gesture can often communicate important information more easily. For example, when a display is available on the interface, gestures can complement spoken input to produce a more natural and robust interaction. We are exploring a variety of multimodal scenarios that combine speech and gesture. Our web-based prototypes run inside a conventional browser and transmit audio and pen or mouse gesture information to servers running at MIT, so they are available anywhere a user is connected to the internet.

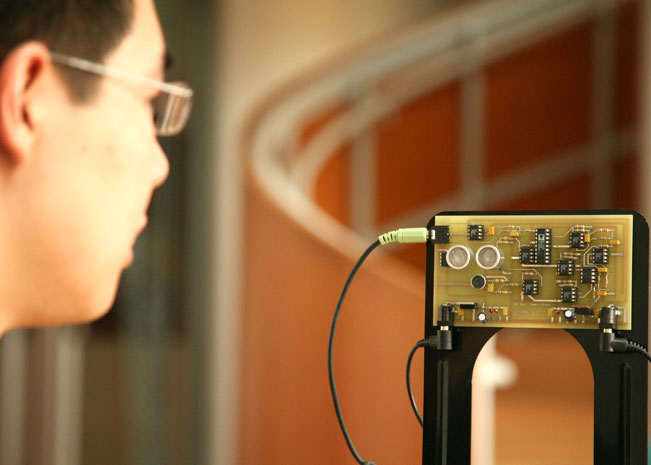

Multimodal Processing

In addition to conveying linguistic information, the speech signal also contains cues about a talker's identity, location, and emotional (paralinguistic) state. Information about these attributes can also be found in the visual channel, as well as in other untethered modalities. Combining conventional audio-based processing with additional modalities can often improve performance, sometimes dramatically, especially in challenging audio environments. We are performing research in a number of areas related to person identification and speech recognition. The goal is to obtain robust performance in a variety of environments without unduly encumbering the talker.
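One common way to combine modalities is weighted late fusion, in which each modality scores the candidates independently and the scores are then mixed. The following is a minimal sketch of that idea, not a description of the systems above: the function names, the `audio_weight` parameter, and the example scores are all hypothetical and chosen only for illustration.

```python
def fuse_scores(audio_scores, visual_scores, audio_weight=0.7):
    """Weighted late fusion of per-candidate log scores (illustrative only).

    audio_scores / visual_scores: dicts mapping candidate name -> log score.
    audio_weight: hypothetical mixing weight in [0, 1]; the visual stream
    receives the remaining (1 - audio_weight).
    """
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must lie in [0, 1]")
    # Only candidates scored by both modalities can be fused.
    shared = audio_scores.keys() & visual_scores.keys()
    return {
        name: audio_weight * audio_scores[name]
        + (1.0 - audio_weight) * visual_scores[name]
        for name in shared
    }


def best_candidate(scores):
    """Return the candidate with the highest score."""
    return max(scores, key=scores.get)


# Made-up scores for a noisy recording: audio alone slightly prefers "bob",
# while the visual stream clearly prefers "alice".
audio = {"alice": -1.2, "bob": -1.0}
visual = {"alice": -0.3, "bob": -2.0}

fused = fuse_scores(audio, visual, audio_weight=0.5)
print(best_candidate(audio))  # decision from audio alone
print(best_candidate(fused))  # decision after fusion
```

In a noisy setting one would typically lower `audio_weight` so that the less degraded modality dominates; how such a weight is chosen in practice is outside the scope of this sketch.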

Multimodal Generation

Multimedia environments offer rich opportunities for conveying information to the user that is more difficult to communicate via an audio-only channel. In our current research, we are exploring interfaces that can transparently convey the current state of understanding from the computer side of the conversation. We are also exploring mechanisms for real-time feedback while the computer is listening to the talker. Finally, we are exploring audio-visual speech generation to complement more conventional information displays. The video below shows an example of video-realistic facial animation combined with concatenative speech synthesis.

Further Reading

T. Hazen and D. Schultz, "Multi-Modal User Authentication from Video for Mobile or Variable-Environment Applications," Proc. Interspeech, Antwerp, Belgium, August 2007.

B. Zhu, T. Hazen, and J. Glass, "Multimodal Speech Recognition with Ultrasonic Sensors," Proc. Interspeech, Antwerp, Belgium, August 2007.

T. Hazen, K. Saenko, C. La, and J. Glass, "A segment-based audio-visual speech recognizer: Data collection, development and initial experiments," Proc. ICMI, State College, PA, October 2004.

T. Hazen, E. Weinstein, and A. Park, "Towards Robust Person Recognition on Handheld Devices Using Face and Speaker Identification Technologies," Proc. ICMI, Vancouver, November 2003.

32 Vassar Street, Cambridge, MA 02139 USA, (+1) 617.253.3049
