Abstract

In order to deliver the promise of Moore's Law to the end user, compilers must make decisions that are intimately tied to a specific target architecture. As engineers add architectural features to increase performance, systems become harder to model, and thus it becomes harder for a compiler to make effective decisions.

Machine-learning techniques may be able to help compiler writers

model modern architectures. Because learning techniques can effectively

make sense of high dimensional spaces, they can be a valuable tool for clarifying

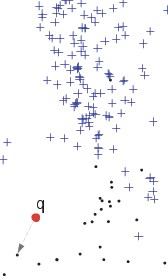

and discerning complex decision boundaries. In our work we focus on

loop unrolling, a well-known optimization for exposing instruction level

parallelism. Using the Open Research Compiler (ORC) as a test bed, we

demonstrate how one can use supervised learning techniques to model the appropriateness

of loop unrolling.
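The following is a rough illustration of treating unroll-factor selection as supervised classification. It uses a 1-nearest-neighbor rule, the technique named in the title of the second paper listed below. Each training loop is a feature vector labeled with the unroll factor that worked best for it, and a new loop inherits the label of its closest training example. This is a minimal sketch under stated assumptions, not the authors' implementation. The three features, the sample training data, and the function name `predict_unroll_factor` are all hypothetical and are not taken from the papers or from ORC.

```python
import math
from typing import List, Tuple

# Hypothetical feature vector for a loop (NOT the feature set used in the papers):
# (operations in the loop body, memory operations in the body, estimated trip count)
LoopFeatures = Tuple[float, float, float]

# Hypothetical labeled training loops: each pairs a feature vector with the
# unroll factor that, in this made-up data, gave the best measured runtime.
TRAINING: List[Tuple[LoopFeatures, int]] = [
    ((4.0, 1.0, 100.0), 8),    # small body, long trip count: unroll aggressively
    ((40.0, 12.0, 100.0), 2),  # large body: modest unrolling
    ((10.0, 4.0, 3.0), 1),     # very short trip count: do not unroll
]


def predict_unroll_factor(
    training: List[Tuple[LoopFeatures, int]], query: LoopFeatures
) -> int:
    """Return the unroll factor of the training loop nearest to `query`.

    Uses plain Euclidean distance on raw features; a real system would
    normally normalize features first so that no single feature dominates.
    """
    if not training:
        raise ValueError("training set is empty")
    # min() keeps the first example on ties, so ties resolve by training order.
    _, label = min(training, key=lambda example: math.dist(example[0], query))
    return label


print(predict_unroll_factor(TRAINING, (5.0, 1.0, 90.0)))    # 8
print(predict_unroll_factor(TRAINING, (38.0, 10.0, 120.0)))  # 2
```

Nearest neighbors needs no training phase beyond storing labeled examples, which makes its predictions easy to inspect. The trade-off is that prediction cost grows with the number of stored loops. Results are also sensitive to how the features are scaled.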

Papers

M. Stephenson, S. Amarasinghe. Predicting Unroll Factors Using Supervised Classification. In Proceedings of the International Symposium on Code Generation and Optimization. San Jose, California. March 2005 (ps, pdf, ppt).

M. Stephenson, S. Amarasinghe. Predicting Unroll Factors Using Nearest Neighbors. MIT-TM-938. March 2004 (ps, pdf).

Software

We will soon release ORC instrumentation code, as well as some Matlab implementations of the learning algorithms we used.

People

Mark Stephenson

Una-May O'Reilly

Saman Amarasinghe

Related Links

Eliot Moss and John Cavazos at the University of Massachusetts are working on applying supervised learning to compilation.
