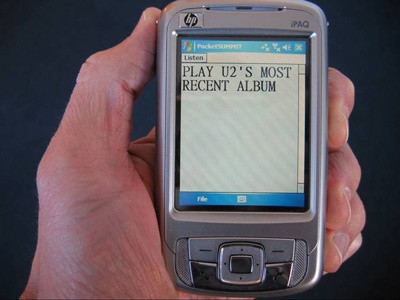

Small Platform-based Information Access

As PDAs and cell phones continue to shrink in size but expand in functionality, conventional menu- and text-based input becomes increasingly cumbersome, so spoken dialogue-based interaction will become crucial. Mobile interaction will support many applications for accessing and manipulating information, across a wide range of situations.

Traditionally, SLS speech and language technologies have been designed to provide a flexible framework for incorporating new research ideas. They were developed to run on Unix workstations, where significant processing power and memory are available, and have been used in a client-server architecture. The modest memory and processing resources of handheld devices pose challenges for the more computation- and memory-intensive components. The handheld devices we have investigated so far have typically had 400 MHz ARM processors running either Linux or Windows, no floating-point hardware, and 32 to 64 MB of RAM. These limitations severely constrained our speech recognizer, so we redesigned it from scratch with these constraints in mind (Hetherington, 2007). The redesigned recognizer has been demonstrated running on handheld devices in both English and Mandarin. We have also ported our language understanding and generation components to these environments, and have begun porting our dialogue component.

In addition to using a handheld as a stand-alone platform, we are investigating hybrid scenarios in which a mobile device interacts with other devices in the surrounding environment, whether at home, at work, or in a vehicle. For example, we are developing a prototype multimodal dialogue system for interacting with a home media server from a mobile device.
In our working prototype, users can combine a graphical and a spoken interface to search TV listings, record and play television programs, and listen to music. The framework is generic and could support a wide variety of applications, as we demonstrate by integrating a weather forecast application. The mobile device serves as the locus of interaction, providing a small touch-screen display together with speech input and output, while the TV screen presents a larger, richer GUI. The system architecture is agnostic to the location of the natural language processing components: the user experience stays the same whether they run on a remote server or on the device itself.
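One common way to make dialogue logic independent of where language processing runs is to hide the understanding component behind a single interface, with interchangeable on-device and server-backed implementations. The Python sketch below assumes such a design; the names `Understander`, `LocalUnderstander`, `RemoteUnderstander` and `handle_turn`, the dictionary-based semantic frame, and the toy keyword matcher are all hypothetical illustrations, not the SLS implementation. The remote transport is injected as a plain function so that no particular network protocol is assumed.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict

# Hypothetical semantic frame: a flat mapping from slot names to values.
Frame = Dict[str, str]


class Understander(ABC):
    """Hypothetical interface for a natural language understanding component."""

    @abstractmethod
    def parse(self, utterance: str) -> Frame:
        ...


class LocalUnderstander(Understander):
    """Runs on the device; a toy keyword matcher stands in for a real parser."""

    def parse(self, utterance: str) -> Frame:
        words = utterance.lower().split()
        if "record" in words:
            # Everything after "record" is treated as the program to record.
            target = " ".join(words[words.index("record") + 1:])
            return {"action": "record", "object": target}
        if "weather" in words:
            return {"action": "weather"}
        return {"action": "unknown"}


class RemoteUnderstander(Understander):
    """Delegates parsing to a server through an injected transport function."""

    def __init__(self, send: Callable[[str], Frame]):
        self._send = send

    def parse(self, utterance: str) -> Frame:
        return self._send(utterance)


def handle_turn(nlu: Understander, utterance: str) -> str:
    """Dialogue-side code sees only the interface, never where parsing happens."""
    frame = nlu.parse(utterance)
    if frame["action"] == "record":
        return f"Scheduling recording: {frame['object']}"
    if frame["action"] == "weather":
        return "Fetching the forecast"
    return "Sorry, I did not understand"


if __name__ == "__main__":
    local = LocalUnderstander()
    # Simulate a server round trip with a local function; a real system
    # would send the utterance over the network instead.
    remote = RemoteUnderstander(send=lambda text: LocalUnderstander().parse(text))
    for nlu in (local, remote):
        print(handle_turn(nlu, "please record the evening news"))
```

Both configurations print `Scheduling recording: the evening news`, which is the point of the design: swapping the component's location changes only which object is passed to `handle_turn`, not the dialogue code or the user-visible behavior.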

Further Reading

I. Hetherington, "PocketSUMMIT: Small-Footprint Continuous Speech Recognition," Proc. Interspeech, Antwerp, Belgium, August 2007.


32 Vassar Street, Cambridge, MA 02139, USA, (+1) 617.253.3049
