For details on the MPEG-2 format itself we suggest reading either the MPEG-2 video specification or the Wikipedia article on the subject.

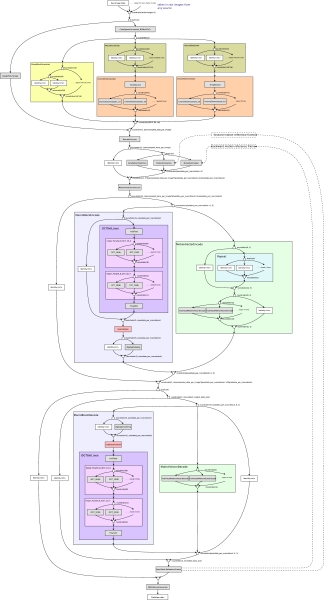

Both the decoder and the encoder are represented by stream graphs showing the filters, splitters, and joiners that operate on the data. Each is described with respect to its block diagram, which shows the flow of data through the computation.

The MPEG encoder, as one would expect, is roughly the reverse of the decoder pipeline. However, because the compression is lossy, the encoder must also incorporate most of the decoder so that it knows exactly what the decoder sees at its output. This is necessary so that the reference frames used for motion compensation are identical on both sides; otherwise the errors introduced by the lossy compression would accumulate from frame to frame.
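
To make the accumulation argument concrete, here is a minimal one-dimensional sketch in Python. It is a toy model, not the benchmark's code: the names `quantize` and `simulate`, the sample values, and the step size are all assumptions chosen for illustration. Each sample is predicted from a reference, and only the quantized residual is transmitted.

```python
# Toy 1-D illustration of reference drift (hypothetical names and data).

def quantize(value, step):
    """Lossy step: snap a residual to the nearest multiple of `step`."""
    return step * round(value / step)

def simulate(samples, step, closed_loop):
    """Return the values a decoder would reconstruct for `samples`."""
    enc_ref = 0  # reference the encoder predicts from
    dec_ref = 0  # reference the decoder holds
    decoded = []
    for s in samples:
        residual = quantize(s - enc_ref, step)  # the only data transmitted
        dec_ref += residual                     # decoder adds it to its reference
        decoded.append(dec_ref)
        # Closed loop: the encoder mirrors the decoder's reconstruction.
        # Open loop: the encoder keeps predicting from the original sample.
        enc_ref = dec_ref if closed_loop else s
    return decoded

samples = [3, 6, 9, 12, 15, 18]
open_err = [abs(d - s) for d, s in zip(simulate(samples, 4, False), samples)]
closed_err = [abs(d - s) for d, s in zip(simulate(samples, 4, True), samples)]
```

With these inputs the open-loop error grows by one at every sample, while the closed-loop error never exceeds half the quantization step.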

The input to the encoder is a sequence of raw video frames. Initially the data is duplicated and split: one channel is passed to a filter that determines a picture type for the image, and the other is passed to a filter that downsamples the color channels based on the chrominance format. The resulting data is combined, and a Picture Reorder filter rearranges the pictures so that each motion-predicted image's reference frames have already been processed.
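
The reordering step can be sketched as follows. This is an illustrative Python fragment, not the benchmark's filter: the function name `reorder_pictures` and the picture-type sequence are assumptions. The rule it applies is that a B picture is held back until the next I or P picture after it in display order has been emitted.

```python
# Sketch of display-order to coding-order reordering (hypothetical helper).

def reorder_pictures(types):
    """Return display indices in coding order.

    `types` is a sequence of 'I', 'P', or 'B' in display order. Each B
    picture is emitted after the next reference picture (I or P) that
    follows it, so both of its references are processed first.
    """
    coded = []
    pending_b = []
    for index, picture_type in enumerate(types):
        if picture_type == "B":
            pending_b.append(index)   # wait for the following reference
        else:
            coded.append(index)       # reference picture goes first
            coded.extend(pending_b)   # then the B pictures that needed it
            pending_b = []
    coded.extend(pending_b)           # trailing B pictures with no later reference
    return coded

coding_order = reorder_pictures("IBBPBBP")
```

For the display sequence `IBBPBBP`, this yields the index order 0, 3, 1, 2, 6, 4, 5.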

The bulk of the picture data is then triplicated, with one copy sent to each of three motion estimation filters. The first filter performs no motion estimation. The second and third filters each perform motion estimation with respect to a reference image. Each of the two motion estimators uses a different reference image, one referencing the preceding key image and the other referencing the second preceding image. Thus, one provides forward estimation for P images and backward estimation for B images, and the other provides forward estimation for B images. The output of each of these filters is the motion vectors for the best estimate and the difference between the predicted and actual values of the macroblock.
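
As a rough illustration of what one motion estimation filter produces, the sketch below does an exhaustive block-matching search using the sum of absolute differences (SAD). The search strategy, the SAD metric, the 4x4 block size, and the names `sad` and `estimate_motion` are assumptions made for this example; the source does not say which search the benchmark uses, and real MPEG-2 macroblocks are 16x16.

```python
# Exhaustive block matching on small integer frames (hypothetical helpers).

def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between a block and a displaced reference block."""
    return sum(abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
               for y in range(n) for x in range(n))

def estimate_motion(cur, ref, bx, by, n=4, search=2):
    """Return ((dx, dy), residual) for the block whose top-left corner is (bx, by)."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip displacements that fall outside the reference frame.
            if by + dy < 0 or by + dy + n > height or bx + dx < 0 or bx + dx + n > width:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    _, dx, dy = best
    residual = [[cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x]
                 for x in range(n)] for y in range(n)]
    return (dx, dy), residual

# Synthetic data: the current frame is the reference shifted by one pixel.
ref = [[x * x + 10 * y * y for x in range(8)] for y in range(8)]
cur = [[ref[y - 1][x + 1] if y >= 1 and x + 1 < 8 else 0 for x in range(8)]
       for y in range(8)]
vector, residual = estimate_motion(cur, ref, bx=2, by=2)
```

Because the current frame here is an exact shifted copy of the reference, the search recovers the displacement (1, -1) and the residual is all zeros.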

The three data streams are then joined, and a filter immediately after the joiner decides which of the estimates to use, based on which provides the best compression. This filter also computes the bidirectionally predicted block values in the case where both the forward and backward predictions are used.
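
A simplified sketch of such a decision filter follows. It makes several assumptions that the source does not confirm: it takes predicted blocks rather than vectors and differences as input, it uses the sum of absolute residual values as a stand-in for "best compression", and it forms the bidirectional prediction as a rounded average of the forward and backward predictions. The function names are hypothetical.

```python
# Choosing among prediction modes by residual size (hypothetical helpers).

def residual(block, prediction):
    """Element-wise difference between the actual block and a prediction."""
    return [[b - p for b, p in zip(brow, prow)]
            for brow, prow in zip(block, prediction)]

def cost(res):
    """Proxy for coded size: sum of absolute residual values (an assumption)."""
    return sum(abs(v) for row in res for v in row)

def bidirectional(forward, backward):
    """Rounded average of the forward and backward predictions."""
    return [[(f + b + 1) // 2 for f, b in zip(frow, brow)]
            for frow, brow in zip(forward, backward)]

def choose_prediction(block, forward, backward):
    """Return (mode, residual) for the cheapest of the candidate predictions."""
    zero = [[0] * len(row) for row in block]  # "no motion estimation" candidate
    candidates = {
        "none": zero,
        "forward": forward,
        "backward": backward,
        "bidirectional": bidirectional(forward, backward),
    }
    best = min(candidates, key=lambda mode: cost(residual(block, candidates[mode])))
    return best, residual(block, candidates[best])

block = [[10, 10], [10, 10]]
forward = [[8, 8], [8, 8]]
backward = [[12, 12], [12, 12]]
mode, chosen_residual = choose_prediction(block, forward, backward)
```

In this example the block lies exactly halfway between the two predictions, so the bidirectional candidate leaves a zero residual and is selected.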

Following this step, the motion vectors are encoded and the macroblock values are coded in a process that reverses the decoder: the values are transformed via a DCT, quantized, and zigzag ordered. The output of this step is then duplicated, with one copy going to the bitstream variable-length coder and the other being inverse zigzag ordered, dequantized, and inverse transformed. This reconstructed copy is then sent upstream via a message to update the reference frames used by the motion estimation filters.
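
The forward path and its inverse can be sketched in plain Python as below. This is an illustration under stated assumptions, not the benchmark's filters: it uses a direct (slow) orthonormal 8x8 DCT, a single uniform quantizer step in place of MPEG-2's quantization matrices and scale factors, and hypothetical function names throughout.

```python
import math

N = 8  # block size

def _scale(k):
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def _basis(i, k):
    return math.cos((2 * i + 1) * k * math.pi / (2 * N))

def dct2(block):
    """Direct 2-D DCT-II with orthonormal scaling."""
    return [[_scale(u) * _scale(v) * sum(block[y][x] * _basis(y, u) * _basis(x, v)
                                          for y in range(N) for x in range(N))
             for v in range(N)] for u in range(N)]

def idct2(coef):
    """Inverse of dct2."""
    return [[sum(_scale(u) * _scale(v) * coef[u][v] * _basis(y, u) * _basis(x, v)
                 for u in range(N) for v in range(N))
             for x in range(N)] for y in range(N)]

def zigzag_order():
    """(row, col) pairs along anti-diagonals, alternating direction."""
    cells = [(r, c) for r in range(N) for c in range(N)]
    return sorted(cells, key=lambda rc: (rc[0] + rc[1],
                                         rc[0] if (rc[0] + rc[1]) % 2 else -rc[0]))

def encode_block(block, step):
    """DCT, uniform quantization (an assumption), then zigzag scan."""
    coef = dct2(block)
    return [round(coef[r][c] / step) for r, c in zigzag_order()]

def decode_block(scan, step):
    """Inverse zigzag, dequantization, then inverse DCT."""
    coef = [[0] * N for _ in range(N)]
    for level, (r, c) in zip(scan, zigzag_order()):
        coef[r][c] = level * step
    return [[round(v) for v in row] for row in idct2(coef)]

flat = [[100] * N for _ in range(N)]
scan = encode_block(flat, 16)          # copy bound for the variable-length coder
reconstructed = decode_block(scan, 16)  # copy that would update the reference frame
```

For the flat block used here, only the first (DC) entry of the scan is nonzero, and the reconstruction matches the input exactly; blocks with more detail would generally come back with small quantization errors, which is why the encoder keeps the reconstructed copy rather than the original as its reference.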